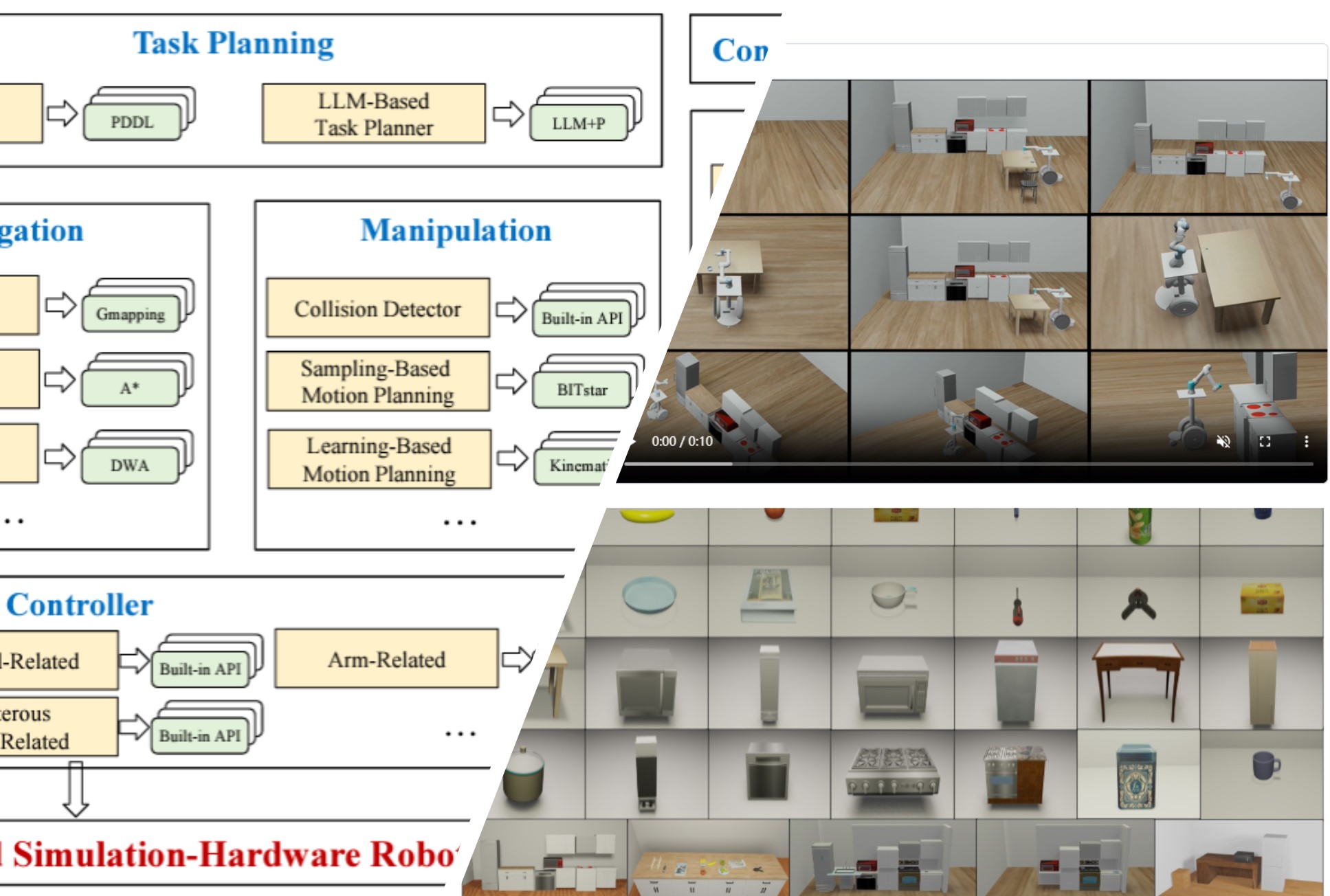

Simulation

BestMan_Pybullet is a PyBullet-based simulation platform for mobile manipulators (a wheeled base with a robotic arm). It offers unified hardware APIs to streamline development and experimentation.

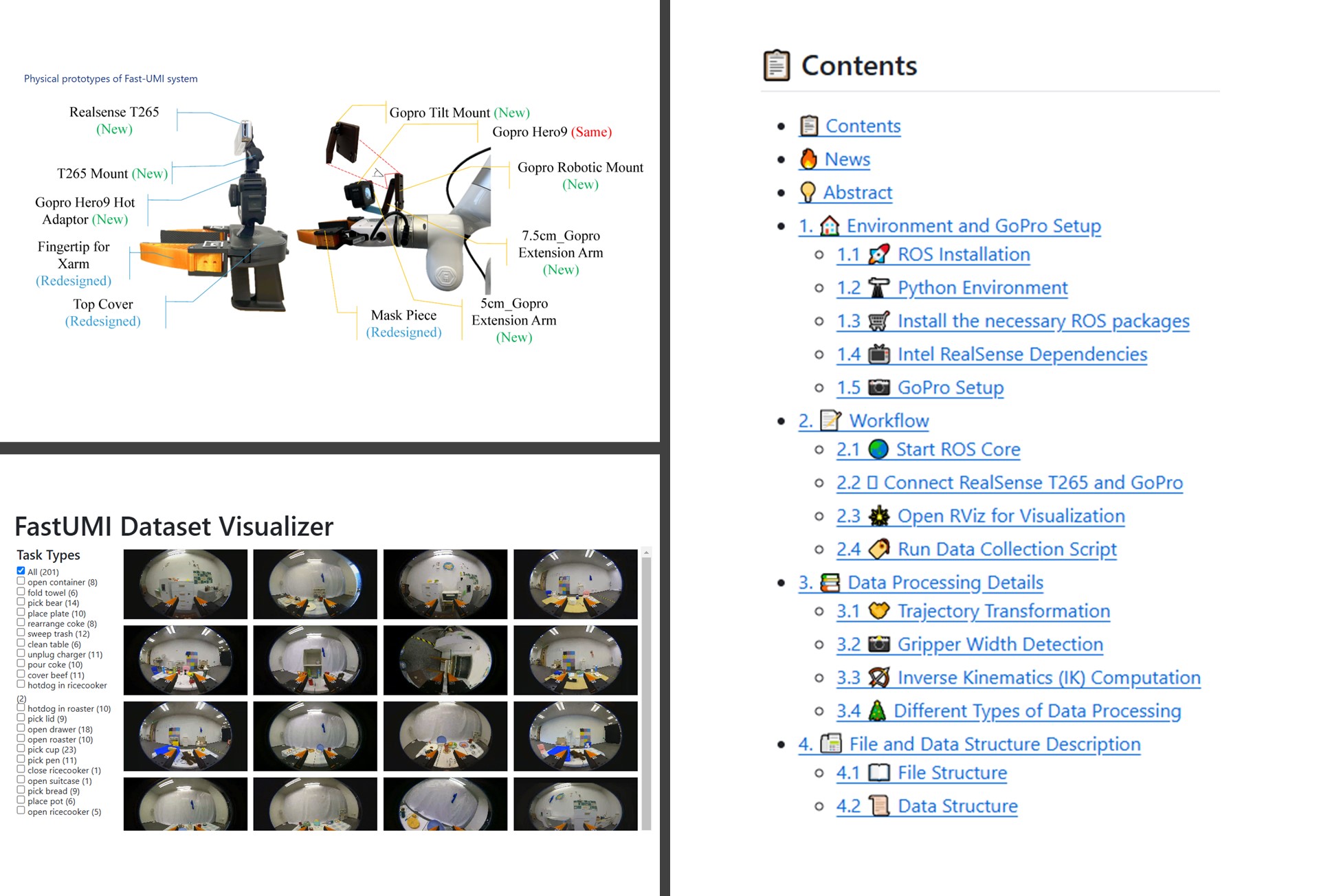

Real Data Collection

FastUMI is a scalable, hardware-independent universal manipulation interface designed to facilitate real-world data collection and accelerate research.
